Proof That DeepSeek Actually Works

Posted by Gerard, 25-02-03 07:11

Let's delve into the features and architecture that make DeepSeek V3 a pioneering model in the field of artificial intelligence. ChatGPT offers a free tier, but you have to pay a monthly subscription for premium features. It has never failed to happen; you need only look at the cost of disks (and their performance) over that period of time for examples.

Trained on a massive 2-trillion-token dataset, with a 102k-vocabulary tokenizer enabling bilingual performance in English and Chinese, DeepSeek-LLM stands out as a robust model for language-related AI tasks. The latest DeepSeek model also stands out because its "weights" (the numerical parameters of the model obtained from the training process) have been openly released, along with a technical paper describing the model's development process.

In the realm of cutting-edge AI technology, DeepSeek V3 stands out as a remarkable advancement that has garnered the attention of AI aficionados worldwide. DeepSeek V2.5, for its part, has made significant strides in improving both performance and accessibility for users. Within the DeepSeek model portfolio, each model serves a distinct purpose, showcasing the versatility and specialization that DeepSeek brings to AI development.

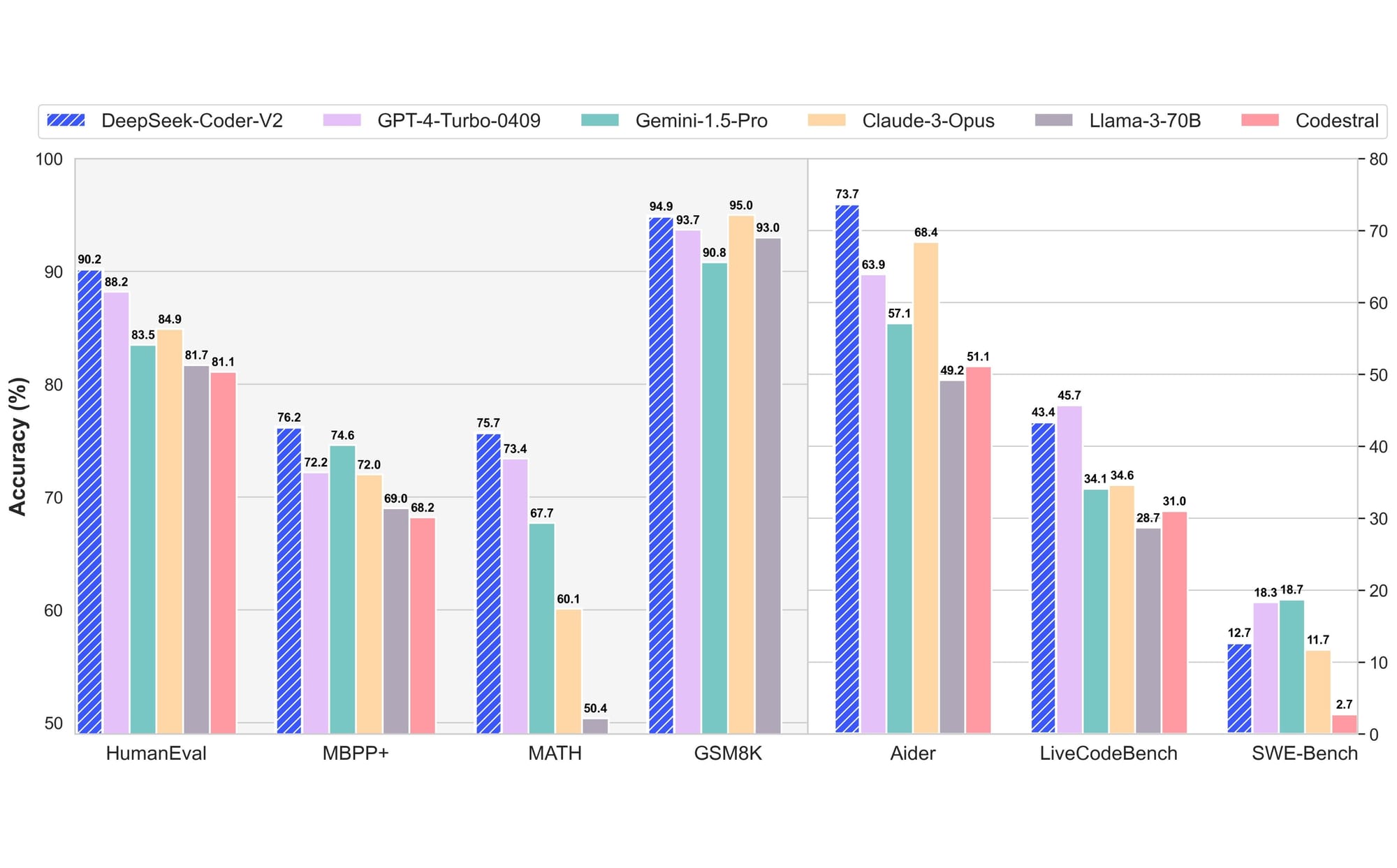

Diving into the diverse range of models within the DeepSeek portfolio, we come across innovative approaches to AI development that cater to various specialized tasks. Mathematical reasoning is a significant challenge for language models because of the complex and structured nature of mathematics. DeepSeek-Coder-V2 is an open-source Mixture-of-Experts (MoE) code language model. By embracing the MoE architecture, DeepSeek V3 sets a new standard in sophisticated AI models. Its chat version also outperforms other open-source models and achieves performance comparable to leading closed-source models, including GPT-4o and Claude-3.5-Sonnet, on a series of standard and open-ended benchmarks. Through internal evaluations, DeepSeek-V2.5 has demonstrated improved win rates against models like GPT-4o mini and ChatGPT-4o-latest in tasks such as content creation and Q&A, thereby enriching the overall user experience. DeepSeek-Coder is a model tailored for code generation tasks, focusing on the efficient creation of code snippets. Whether it's leveraging a Mixture of Experts approach, focusing on code generation, or excelling in language-specific tasks, DeepSeek models offer cutting-edge solutions for diverse AI challenges. DeepSeek V3 adopts a Mixture of Experts approach to scale up parameter count effectively.

This approach enables DeepSeek V3 to achieve performance levels comparable to dense models with the same number of total parameters, despite activating only a fraction of them.
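To make "activating only a fraction of them" concrete, here is a minimal sketch of generic top-k expert routing in plain Python. It is an illustration under stated assumptions, not DeepSeek's actual routing scheme: the sizes (`DIM`, `NUM_EXPERTS`, `TOP_K`), the random weights, and the helper names are all hypothetical, and each "expert" is just a toy linear layer.

```python
import math
import random


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(matrix, vector):
    """Multiply a row-major matrix by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def random_matrix(rows, cols, rng):
    return [[rng.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]


def moe_forward(x, router, experts, top_k):
    """Route x to the top_k highest-scoring experts and mix their outputs."""
    logits = matvec(router, x)  # one routing score per expert
    ranked = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)
    chosen = ranked[:top_k]
    # Gate weights are renormalized over the chosen experts only.
    gates = softmax([logits[i] for i in chosen])
    output = [0.0] * len(x)
    for gate, idx in zip(gates, chosen):
        expert_out = matvec(experts[idx], x)  # only chosen experts are evaluated
        output = [o + gate * y for o, y in zip(output, expert_out)]
    return output, chosen, gates


# Hypothetical toy sizes, chosen only for illustration.
DIM, NUM_EXPERTS, TOP_K = 4, 8, 2
rng = random.Random(0)
experts = [random_matrix(DIM, DIM, rng) for _ in range(NUM_EXPERTS)]
router = random_matrix(NUM_EXPERTS, DIM, rng)

x = [0.5, -1.0, 0.25, 2.0]
output, chosen, gates = moe_forward(x, router, experts, TOP_K)

# Fraction of expert parameters touched for this one input.
active_fraction = TOP_K / NUM_EXPERTS
print("experts used:", chosen, "active fraction:", active_fraction)
```

With these toy settings only 2 of the 8 experts run for a given input, so a quarter of the expert parameters are used per token while the total parameter count stays at the full eight experts.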
