How to Make Your DeepSeek AI Look Amazing in Eight Days

Author: Kirk · Posted: 25-02-04 18:11

Two days ago, DeepSeek was held solely responsible for Nvidia's record-breaking $589 billion loss in market capitalization. Moreover, The New York Times had already pointed out nearly two years ago, when a previous Starship exploded, that it is a "cosmic stage" euphemism.[10] DeepSeek's new AI model has taken the world by storm, with computing costs eleven times lower than those of leading-edge models. The arrival of DeepSeek has shown that the US may not be the dominant AI market leader many thought it to be, and that leading-edge AI models can be built and trained for less than first thought. On the hardware side, DeepSeek has found new ways to get more out of older chips, allowing it to train top-tier models without paying for the latest hardware on the market. Sales of those chips to China have since been restricted, but DeepSeek says its latest AI models were built using lower-performing Nvidia chips that are not banned in China. That revelation has partly fuelled the stock market upheaval, promoting the idea that the most expensive hardware may not be needed for cutting-edge AI development. However, the focus of AI R&D has varied depending on each city's local industrial development and ecosystem.

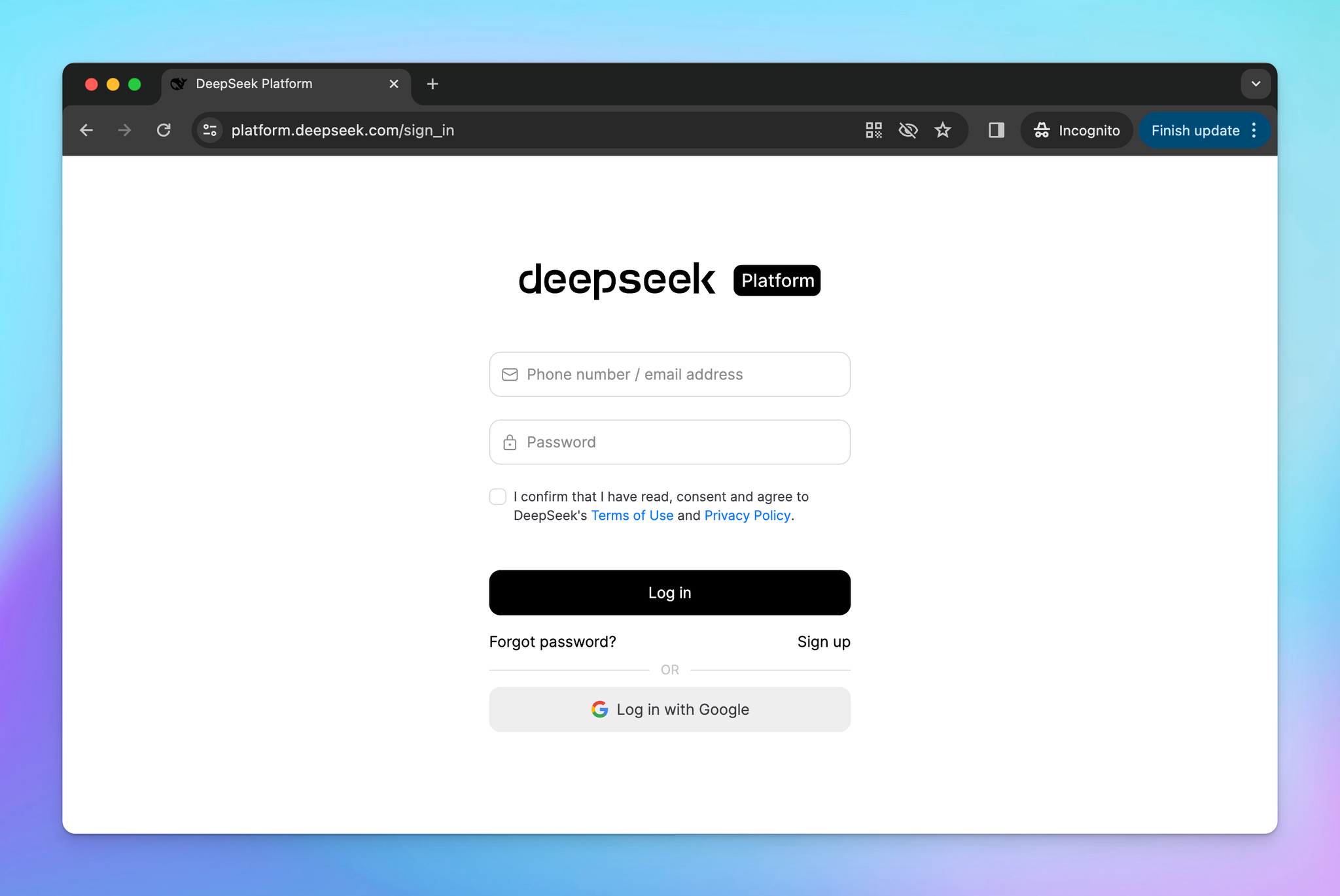

The guide has everything AMD users need to get DeepSeek R1 running on their local (supported) machine. Winner: while ChatGPT promises its users thorough assistance, DeepSeek provides quick, concise guides that experienced programmers and developers may prefer. According to reports, DeepSeek is powered by an open-source model called R1, which its developers claim was trained for around six million US dollars (roughly €5.7 million), although this claim has been disputed by others in the AI sector, and exactly how the developers did this still remains unclear. It is not just explicit disjunctions that can be used to break a problem down into cases; in fact, each of the six clues in the above puzzle can be used this way, but that is an advanced topic for another time. This apparently cost-effective approach, and the use of widely available technology to produce (it claims) near industry-leading results for a chatbot, is what has turned the established AI order upside down. LM Studio has a one-click installer tailored for Ryzen AI, which is the method AMD users will use to install R1.
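Whether a machine counts as "supported" comes down largely to memory. As a rough rule of thumb (an assumption here, not the sizing method used in AMD's guide), a model's weights occupy about parameters × bits-per-weight / 8 bytes, plus some headroom for the context cache and runtime buffers. The sketch below applies that rule with an assumed 4-bit quantization and a hypothetical flat 20% overhead factor; the helper names `weight_bytes` and `estimated_ram_gb` are illustrative, and the parameter counts are the published sizes of the DeepSeek-R1 distilled models.

```python
# Back-of-envelope RAM estimate for running a quantized LLM locally.
# ASSUMPTIONS: weights dominate memory use; 4-bit quantization by default;
# a hypothetical flat 20% overhead stands in for KV cache and runtime buffers.

def weight_bytes(params_billion: float, bits_per_weight: float) -> float:
    """Bytes needed to store the raw weights alone."""
    return params_billion * 1e9 * bits_per_weight / 8


def estimated_ram_gb(params_billion: float,
                     bits_per_weight: float = 4.0,
                     overhead: float = 1.2) -> float:
    """Weights plus the assumed overhead factor, in decimal gigabytes."""
    return weight_bytes(params_billion, bits_per_weight) * overhead / 1e9


# Published parameter counts of the DeepSeek-R1 distilled models, in billions.
DISTILL_SIZES = [1.5, 7, 8, 14, 32, 70]

for size in DISTILL_SIZES:
    print(f"{size:>4}B at 4-bit: ~{estimated_ram_gb(size):.1f} GB")
```

Treat the results as lower bounds. Vendor sizing guidance may be more conservative, since the operating system and any RAM reserved for integrated graphics also compete for the same memory.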

I pull the DeepSeek Coder model and use the Ollama API service to create a prompt and get the generated response. I'm running Ollama in both operating systems of a dual-boot setup, on a desktop and on a mini PC. The mini PC has an 8845HS, 64 GB of RAM, and 780M integrated graphics. The desktop has a 7700X, 64 GB of RAM, and a 7800 XT. Similarly, Ryzen 8040 and 7040 series mobile APUs equipped with 32 GB of RAM, and the Ryzen AI HX 370 and 365 with 24 GB and 32 GB of RAM, can support up to "DeepSeek-R1-Distill-Llama-14B". For example, when asked "What model are you?", it responded, "ChatGPT, based on the GPT-4 architecture." This phenomenon, known as "identity confusion," occurs when an LLM misidentifies itself. It almost feels as if the model's personality or post-training is shallow, which makes the model seem to have more to offer than it delivers. In simple terms, DeepSeek is an AI chatbot app that can answer questions and queries much like ChatGPT, Google's Gemini and others. I was creating simple interfaces using just Flexbox. As a result, Silicon Valley has been left to ponder whether cutting-edge AI can be achieved without necessarily using the newest, and most expensive, technology to build it.
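The pull-then-prompt workflow with Ollama can be sketched in Python using only the standard library. This assumes Ollama is serving on its default local address (`http://localhost:11434`) and that the model was fetched with `ollama pull deepseek-coder`; the model tag and the helper names (`build_request`, `parse_response`, `generate`) are illustrative assumptions, not the author's code. It posts a non-streaming request to Ollama's `/api/generate` endpoint, whose JSON reply carries the generated text in its `response` field.

```python
import json
import urllib.request

# Assumed defaults: Ollama's standard local endpoint and a hypothetical model tag.
OLLAMA_URL = "http://localhost:11434/api/generate"
MODEL = "deepseek-coder"


def build_request(prompt: str, model: str = MODEL) -> bytes:
    """Encode a non-streaming /api/generate request body as JSON bytes."""
    body = {"model": model, "prompt": prompt, "stream": False}
    return json.dumps(body).encode("utf-8")


def parse_response(raw: bytes) -> str:
    """Pull the generated text out of a single (non-streamed) JSON reply."""
    return json.loads(raw)["response"]


def generate(prompt: str, model: str = MODEL, url: str = OLLAMA_URL) -> str:
    """Send the prompt to a running Ollama server and return its completion."""
    req = urllib.request.Request(
        url,
        data=build_request(prompt, model),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    # Local generation can be slow on integrated graphics, so allow a long timeout.
    with urllib.request.urlopen(req, timeout=300) as resp:
        return parse_response(resp.read())
```

With the server running, `print(generate("Write a Python function that reverses a string."))` should print the model's reply. Setting `"stream": False` trades incremental output for a single JSON object, which keeps parsing trivial; a streaming client would instead read one JSON object per line.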
