I Saw This Terrible Information About DeepSeek and I Needed to Google …

Page information

Author: Murray | Date: 25-02-07 10:40 | Views: 11 | Comments: 0

Body

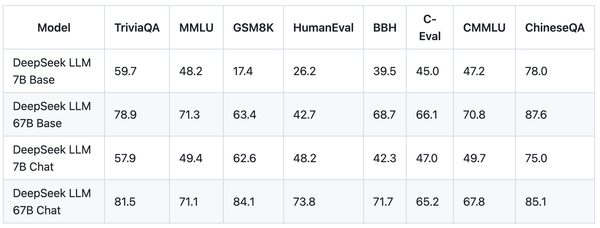

On Jan. 20, 2025, DeepSeek launched its R1 LLM at a fraction of the cost that other vendors incurred in their own developments. Developed by the Chinese AI startup DeepSeek, R1 has been compared to industry-leading models like OpenAI's o1, offering comparable performance at a fraction of the cost. Twilio SendGrid's cloud-based email infrastructure relieves businesses of the cost and complexity of maintaining custom email systems. It runs on the delivery infrastructure that powers MailChimp. LoLLMS Web UI, a great web UI with many interesting and unique features, including a full model library for easy model selection. KoboldCpp, a fully featured web UI, with GPU acceleration across all platforms and GPU architectures. You can ask it to search the web for relevant information, reducing the time you would have spent looking for it yourself. DeepSeek's advancements have caused significant disruptions in the AI industry, leading to substantial market reactions. According to third-party benchmarks, DeepSeek's performance is on par with, or even superior to, state-of-the-art models from OpenAI and Meta in certain domains.

Notably, it even outperforms o1-preview on specific benchmarks, such as MATH-500, demonstrating its strong mathematical reasoning capabilities. The paper attributes the strong mathematical reasoning capabilities of DeepSeekMath 7B to two key factors: the extensive math-related data used for pre-training and the introduction of the GRPO optimization technique. Optimization of architecture for better compute efficiency. DeepSeek indicates that China's science and technology policies may be working better than we have given them credit for. However, unlike ChatGPT, which only searches by relying on certain sources, this feature may also surface false information from some small websites. This may not be a complete list; if you know of others, please let me know! Python library with GPU acceleration, LangChain support, and OpenAI-compatible API server. LM Studio, an easy-to-use and powerful local GUI for Windows and macOS (Silicon), with GPU acceleration. Remove it if you don't have GPU acceleration. Members of Congress have already called for an expansion of the chip ban to encompass a wider range of technologies. The U.S. Navy has instructed its members not to use DeepSeek apps or technology, according to CNBC.
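For readers wondering what GRPO does with a batch of sampled answers, here is a minimal sketch of only its group-relative scoring step: each answer's reward is compared with the other answers sampled for the same prompt. The function name, the use of the population standard deviation, and the small `eps` guard are assumptions made for illustration. This is not the full training objective from the paper.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards, eps=1e-8):
    """Normalise each reward against its own group (mean 0, unit spread).

    `rewards` holds one scalar reward per sampled answer to the same prompt.
    `eps` is an assumed guard against division by zero when all rewards match.
    """
    if not rewards:
        raise ValueError("rewards must contain at least one value")
    group_mean = mean(rewards)
    group_std = pstdev(rewards)  # assumption: population standard deviation
    return [(r - group_mean) / (group_std + eps) for r in rewards]


if __name__ == "__main__":
    # Four sampled answers to one prompt: two judged correct, two incorrect.
    print(group_relative_advantages([1.0, 0.0, 0.0, 1.0]))
```

Answers that beat their group's average get a positive score and the rest get a negative one, so no separate value model is needed to provide the baseline.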


Rust ML framework with a focus on performance, including GPU support, and ease of use. Change -ngl 32 to the number of layers to offload to GPU. Change -c 2048 to the desired sequence length. For extended sequence models - eg 8K, 16K, 32K - the necessary RoPE scaling parameters are read from the GGUF file and set by llama.cpp automatically. Make sure you are using llama.cpp from commit d0cee0d or later. GGUF is a new format introduced by the llama.cpp team on August 21st 2023. It is a replacement for GGML, which is no longer supported by llama.cpp. Here is how you can use the Claude-2 model as a drop-in replacement for GPT models. That seems very wrong to me, I'm with Roon that superhuman outcomes can definitely result. It was released in December 2024. It can respond to user prompts in natural language, answer questions across various academic and professional fields, and perform tasks such as writing, editing, coding, and data analysis. The DeepSeek-R1, which was released this month, focuses on complex tasks such as reasoning, coding, and maths. We've officially launched DeepSeek-V2.5 - a powerful combination of DeepSeek-V2-0628 and DeepSeek-Coder-V2-0724! Compare features, costs, accuracy, and performance to find the best AI chatbot for your needs.
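The -ngl and -c flags mentioned above can be assembled into a command line like this. It is only a sketch: the binary name `./main`, the model file name and the prompt are hypothetical placeholders, and the helper only builds the argument list without running anything.

```python
import shlex


def build_llama_cpp_command(model_path, prompt, gpu_layers=32,
                            context_length=2048, binary="./main"):
    """Build an argument list for a llama.cpp command-line run.

    `binary` is an assumed executable name; adjust it to your own build.
    """
    if gpu_layers < 0:
        raise ValueError("gpu_layers must be zero or positive")
    if context_length <= 0:
        raise ValueError("context_length must be positive")
    command = [binary, "-m", model_path, "-c", str(context_length), "-p", prompt]
    if gpu_layers > 0:
        # Leave -ngl out entirely when there is no GPU acceleration.
        command += ["-ngl", str(gpu_layers)]
    return command


if __name__ == "__main__":
    # Hypothetical file name, used only for illustration.
    args = build_llama_cpp_command("model.Q4_K_M.gguf", "Write a haiku about GPUs")
    print(shlex.join(args))
```

Passing `gpu_layers=0` drops the -ngl flag, which matches the advice to remove it on machines without GPU acceleration.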

Multiple quantisation parameters are provided, to allow you to choose the best one for your hardware and requirements. Multiple different quantisation formats are provided, and most users only need to pick and download a single file. Multiple GPTQ parameter permutations are provided; see Provided Files below for details of the options provided, their parameters, and the software used to create them. This repo contains GPTQ model files for DeepSeek's Deepseek Coder 33B Instruct. This repo contains GGUF format model files for DeepSeek's Deepseek Coder 6.7B Instruct. Note for manual downloaders: You almost never want to clone the entire repo! K - "type-0" 3-bit quantization in super-blocks containing 16 blocks, each block having 16 weights. K - "type-1" 4-bit quantization in super-blocks containing 8 blocks, each block having 32 weights. K - "type-1" 2-bit quantization in super-blocks containing 16 blocks, each block having 16 weights. Super-blocks with 16 blocks, each block having 16 weights. Block scales and mins are quantized with 4 bits. Scales are quantized with 6 bits.
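To see what these super-block layouts mean for file size, here is a rough sketch of the bits-per-weight arithmetic. The function and its parameter names are illustrative. Two things are assumptions not stated above: each super-block is taken to carry one 16-bit scale (plus one 16-bit minimum for "type-1" layouts), and "type-1" blocks are taken to store a minimum at the same width as the scale.

```python
def bits_per_weight(weight_bits, blocks, weights_per_block,
                    scale_bits, has_min, superblock_fp16_values):
    """Estimate the effective bits per weight of one quantized super-block.

    superblock_fp16_values is an assumed count of 16-bit values stored once
    per super-block (for example one scale, or one scale plus one minimum).
    """
    total_weights = blocks * weights_per_block
    payload_bits = weight_bits * total_weights
    # "type-1" layouts store a minimum next to each block scale.
    per_block_bits = scale_bits * (2 if has_min else 1)
    overhead_bits = blocks * per_block_bits + 16 * superblock_fp16_values
    return (payload_bits + overhead_bits) / total_weights


if __name__ == "__main__":
    # "type-0" 3-bit: 16 blocks of 16 weights, 6-bit scales.
    print(bits_per_weight(3, 16, 16, 6, False, 1))
    # "type-1" 4-bit: 8 blocks of 32 weights, 6-bit scales and mins (assumed).
    print(bits_per_weight(4, 8, 32, 6, True, 2))
```

Under these assumptions the 3-bit layout costs a little under 3.5 bits per weight and the 4-bit layout about 4.5, which is why a file is always somewhat larger than the nominal bit width suggests.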
