Find out how to Earn $1,000,000 Using Deepseek

페이지 정보

작성자 Sue 작성일25-02-08 16:25 조회7회 댓글0건관련링크

본문

Keep an eye on bulletins from DeepSeek in case a mobile app is released sooner or later. On January 20, 2025, DeepSeek released DeepSeek-R1 and شات DeepSeek DeepSeek-R1-Zero. In addition they launched DeepSeek-R1-Distill models, which had been tremendous-tuned utilizing different pretrained fashions like LLaMA and Qwen. It was designed to compete with AI models like Meta’s Llama 2 and confirmed higher performance than many open-source AI models at that time. This model has been positioned as a competitor to main models like OpenAI’s GPT-4, with notable distinctions in cost effectivity and performance. Its effectivity earned it recognition, with the University of Waterloo’s Tiger Lab rating it seventh on its LLM leaderboard. But the DeepSeek growth might level to a path for the Chinese to catch up more shortly than beforehand thought. Overall, the CodeUpdateArena benchmark represents an necessary contribution to the ongoing efforts to enhance the code technology capabilities of giant language models and make them more sturdy to the evolving nature of software program improvement.

Keep an eye on bulletins from DeepSeek in case a mobile app is released sooner or later. On January 20, 2025, DeepSeek released DeepSeek-R1 and شات DeepSeek DeepSeek-R1-Zero. In addition they launched DeepSeek-R1-Distill models, which had been tremendous-tuned utilizing different pretrained fashions like LLaMA and Qwen. It was designed to compete with AI models like Meta’s Llama 2 and confirmed higher performance than many open-source AI models at that time. This model has been positioned as a competitor to main models like OpenAI’s GPT-4, with notable distinctions in cost effectivity and performance. Its effectivity earned it recognition, with the University of Waterloo’s Tiger Lab rating it seventh on its LLM leaderboard. But the DeepSeek growth might level to a path for the Chinese to catch up more shortly than beforehand thought. Overall, the CodeUpdateArena benchmark represents an necessary contribution to the ongoing efforts to enhance the code technology capabilities of giant language models and make them more sturdy to the evolving nature of software program improvement.

Overall, below such a communication technique, only 20 SMs are sufficient to completely make the most of the bandwidths of IB and NVLink. " second, however by the time i saw early previews of SD 1.5 i was by no means impressed by an image mannequin again (even though e.g. midjourney’s customized fashions or flux are much better. This integration resulted in a unified mannequin with significantly enhanced performance, providing better accuracy and versatility in both conversational AI and coding tasks. The DeepSeek-R1 mannequin was educated using thousands of artificial reasoning knowledge and non-reasoning tasks like writing and translation. It was skilled utilizing 8.1 trillion phrases and designed to handle complicated duties like reasoning, coding, and answering questions accurately. It was skilled utilizing 1.8 trillion phrases of code and textual content and came in several variations. This version was educated utilizing 500 billion phrases of math-related textual content and included fashions fantastic-tuned with step-by-step problem-solving techniques. Compressor abstract: The textual content discusses the safety risks of biometric recognition resulting from inverse biometrics, which permits reconstructing artificial samples from unprotected templates, and critiques strategies to assess, evaluate, and mitigate these threats. They used synthetic information for coaching and applied a language consistency reward to ensure that the mannequin would reply in a single language.

Overall, below such a communication technique, only 20 SMs are sufficient to completely make the most of the bandwidths of IB and NVLink. " second, however by the time i saw early previews of SD 1.5 i was by no means impressed by an image mannequin again (even though e.g. midjourney’s customized fashions or flux are much better. This integration resulted in a unified mannequin with significantly enhanced performance, providing better accuracy and versatility in both conversational AI and coding tasks. The DeepSeek-R1 mannequin was educated using thousands of artificial reasoning knowledge and non-reasoning tasks like writing and translation. It was skilled utilizing 8.1 trillion phrases and designed to handle complicated duties like reasoning, coding, and answering questions accurately. It was skilled utilizing 1.8 trillion phrases of code and textual content and came in several variations. This version was educated utilizing 500 billion phrases of math-related textual content and included fashions fantastic-tuned with step-by-step problem-solving techniques. Compressor abstract: The textual content discusses the safety risks of biometric recognition resulting from inverse biometrics, which permits reconstructing artificial samples from unprotected templates, and critiques strategies to assess, evaluate, and mitigate these threats. They used synthetic information for coaching and applied a language consistency reward to ensure that the mannequin would reply in a single language.

One of many standout features of DeepSeek-R1 is its clear and competitive pricing model. Regular Updates: Stay forward with new features and enhancements rolled out constantly. Considered one of the biggest challenges in theorem proving is determining the precise sequence of logical steps to solve a given downside. "The know-how race with the Chinese Communist Party is just not one the United States can afford to lose," LaHood mentioned in a statement. DeepSeek AI is a Chinese synthetic intelligence firm headquartered in Hangzhou, Zhejiang. Founded in 2023, this progressive Chinese company has developed a complicated AI mannequin that not only rivals established players but does so at a fraction of the price. Founded by Liang Wenfeng in 2023, the company has gained recognition for its groundbreaking AI model, DeepSeek-R1. DeepSeek-R1 stands out as a powerful reasoning mannequin designed to rival advanced systems from tech giants like OpenAI and Google. DeepSeek-R1 is obtainable in multiple formats, akin to GGUF, authentic, and 4-bit versions, making certain compatibility with numerous use circumstances. We curate our instruction-tuning datasets to incorporate 1.5M instances spanning multiple domains, with every domain employing distinct information creation methods tailor-made to its particular requirements. This desk gives a structured comparison of the performance of DeepSeek-V3 with other models and versions across a number of metrics and domains.

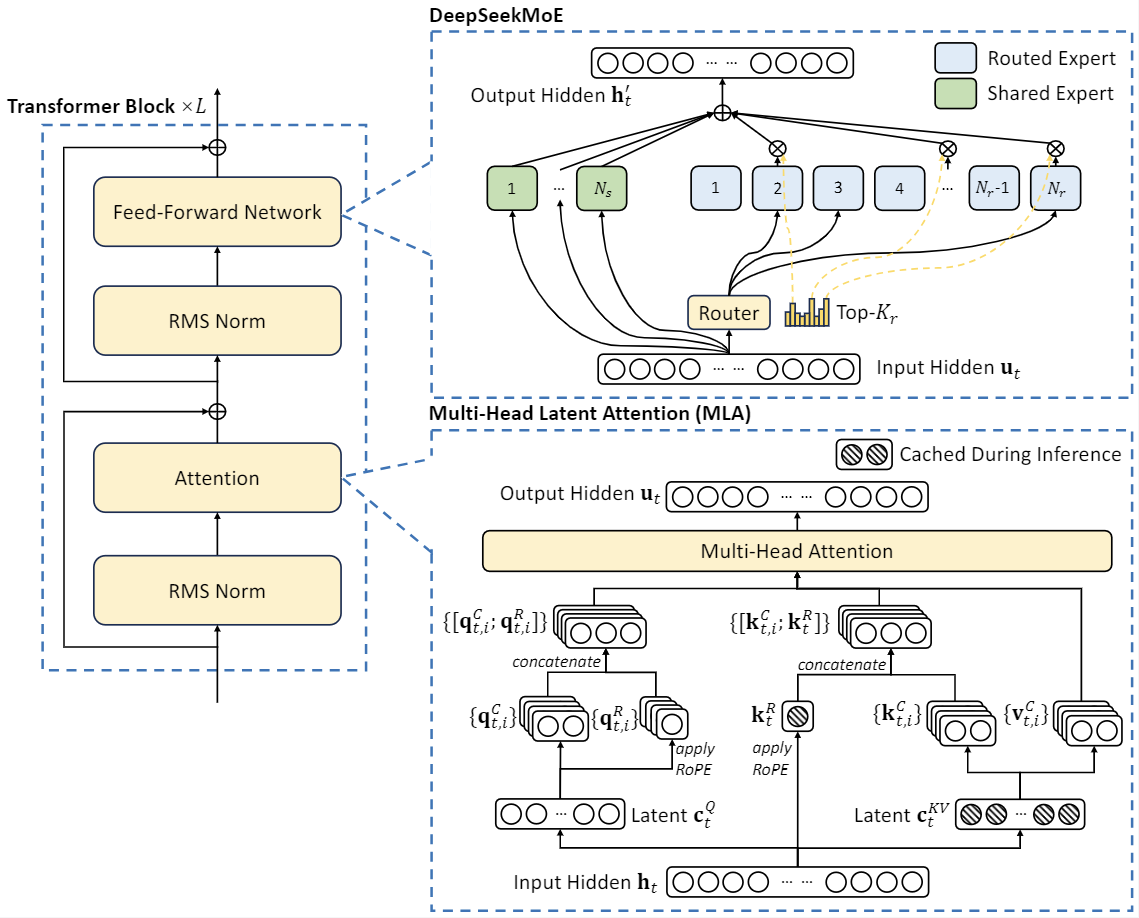

The app offers tiered subscription plans that cater to varying ranges of usage. DeepSeek-V2 brought another of DeepSeek’s innovations - Multi-Head Latent Attention (MLA), a modified consideration mechanism for Transformers that enables quicker info processing with less memory utilization. Launched in May 2024, DeepSeek-V2 marked a big leap ahead in both price-effectiveness and performance. Here is the listing of 5 recently launched LLMs, along with their intro and usefulness. Later, DeepSeek launched DeepSeek-LLM, a common-purpose AI model with 7 billion and 67 billion parameters. OpenAI has been the defacto mannequin provider (along with Anthropic’s Sonnet) for years. No must threaten the model or carry grandma into the immediate. Yet fine tuning has too high entry point compared to simple API access and immediate engineering. The theory with human researchers is that the technique of doing medium quality analysis will allow some researchers to do top quality research later. Should you look at the statistics, it is quite apparent individuals are doing X on a regular basis. I think open source goes to go in a similar manner, the place open source is going to be nice at doing fashions in the 7, 15, 70-billion-parameters-vary; and they’re going to be great fashions.

If you have any type of inquiries regarding where and just how to utilize ديب سيك شات, you can contact us at the web-site.

댓글목록

등록된 댓글이 없습니다.