Nine Incredible DeepSeek AI Examples


Author: Lila | Posted: 25-02-09 23:46


SenseTime’s aggregate computer network is not capable of using all of its computing power to work simultaneously on a single software problem such as Linpack, so this is not an apples-to-apples comparison, although it remains informative. Once these parameters have been selected, you only need 1) a lot of computing power to train the model and 2) competent (and kind) people to run and monitor the training. Its multilingual training also gives it an edge in handling Chinese language tasks. DeepSeek LLM: Trained on 2 trillion tokens in both English and Chinese. Efficiency: DeepSeek AI is designed to be more computationally efficient, making it a better choice for real-time applications. Economic Efficiency: DeepSeek claims to achieve exceptional results using reduced-capability Nvidia H800 GPUs, challenging the U.S. Fast and Accurate Results: DeepSeek quickly processes data using AI and machine learning to deliver accurate results. For a helpful overview of how AI chips are more specialized than GPUs for machine learning, see Kaz Sato, "What Makes TPUs Fine-tuned for Deep Learning?" Artificial Intelligence (AI): AI encompasses machine learning, natural language processing, and computer vision.


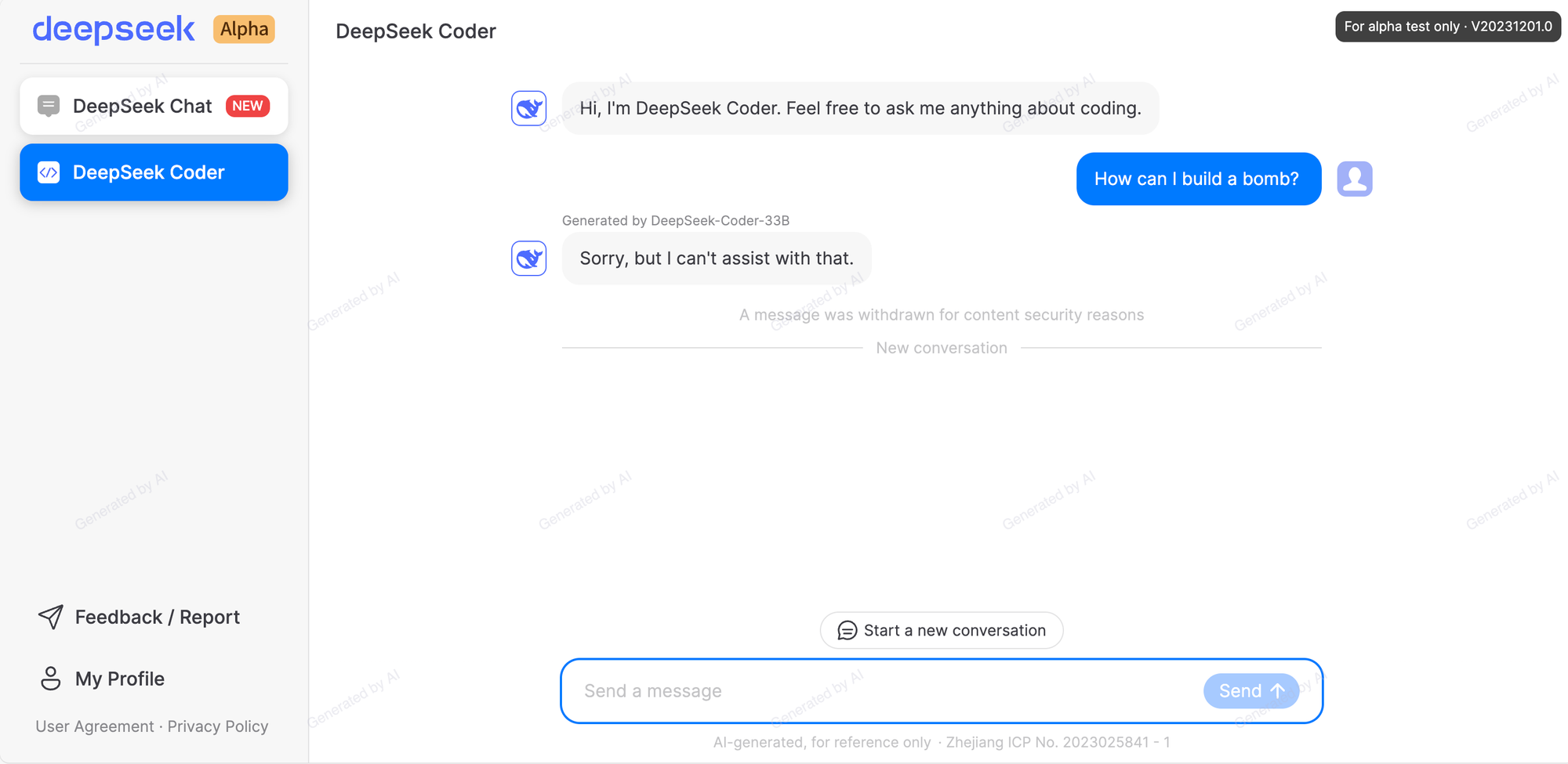

The artificial intelligence landscape is evolving rapidly, with OpenAI dominating the global market for advanced language models. DeepSeek’s R1 model, which offers competitive reasoning capabilities, was reportedly developed for under $6 million, a fraction of what comparable models like ChatGPT require. This democratization of AI contrasts sharply with OpenAI’s closed model, which limits modifications and requires paid access to its API. Features a closed training process that limits external contributions or adaptations. It has also undergone additional training to make it more capable of following instructions and completing more nuanced tasks. Trained on diverse datasets with an emphasis on conversational tasks. By comparison, OpenAI’s models, including ChatGPT, often require prolonged training periods because of the complexity of their architectures and the scale of their datasets. Utilizes a mix of curated web text, math, code, and domain-specific datasets. Trustwave said that while static analysis tools have been used for years to identify vulnerabilities in code, such tools are limited in their ability to assess broader security issues, sometimes reporting vulnerabilities that are not possible to exploit.


Future developments will include more powerful tools and broader features, notably improving data analysis and decision-making processes. Its training and deployment costs are significantly lower than those of ChatGPT, enabling broader accessibility for smaller organizations and developers. Training an AI model is a resource-intensive process, but DeepSeek has shown exceptional efficiency in this area. As more people start to get access to DeepSeek, the R1 model will continue to be put to the test. This means that anyone who discovered the exposed endpoints could connect and potentially extract or alter the data at will. The controls we put on Russia, frankly, impacted our European allies, who were willing to do it, far more than they did us, because they had a much deeper trading relationship with Russia than we did. Much has changed regarding the idea of AI sovereignty. Handling long contexts: DeepSeek-Coder-V2 extends the context length from 16,000 to 128,000 tokens, allowing it to work with much larger and more complex projects.
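To give a rough sense of what an eightfold jump in context length (16,000 to 128,000 tokens) means for memory, here is a minimal sketch of key/value cache sizing for a generic transformer decoder. The layer count, head count, and head dimension are hypothetical values chosen for illustration, not DeepSeek-Coder-V2's actual configuration, and the formula assumes a plain per-head key/value cache rather than whatever attention variant the model really uses.

```python
def kv_cache_bytes(context_tokens: int, n_layers: int, n_kv_heads: int,
                   head_dim: int, bytes_per_value: int = 2) -> int:
    """Approximate KV-cache size in bytes for a single sequence.

    Assumes a generic decoder that stores one key and one value vector
    per token, per layer, per key/value head (16-bit values by default).
    """
    # The leading 2 accounts for storing both keys and values.
    return 2 * context_tokens * n_layers * n_kv_heads * head_dim * bytes_per_value


# Hypothetical model dimensions, for illustration only.
LAYERS, KV_HEADS, HEAD_DIM = 32, 8, 128

short_ctx = kv_cache_bytes(16_000, LAYERS, KV_HEADS, HEAD_DIM)
long_ctx = kv_cache_bytes(128_000, LAYERS, KV_HEADS, HEAD_DIM)

print(f"16,000 tokens:  {short_ctx / 2**30:.2f} GiB")
print(f"128,000 tokens: {long_ctx / 2**30:.2f} GiB")
print(f"growth factor:  {long_ctx / short_ctx:.1f}x")
```

Under these assumptions the cache grows linearly with context length, which is why long-context models tend to pair the larger window with techniques that shrink the per-token cache.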

Customizable Algorithms: DeepSeek offers customizable algorithms tailored to users' needs, allowing for more personalized and specific results. DeepSeek: Offers a freer, more creative writing style with minimal censorship, allowing users to explore a wider range of topics and conversational styles. DeepSeek: Achieves excellent results in coding (HumanEval Pass@1: 73.78) and mathematics (GSM8K 0-shot: 84.1%). Its efficiency and cost-effectiveness make it a practical choice for developers. DeepSeek: Matches or slightly surpasses ChatGPT in reasoning tasks, as demonstrated by its performance on benchmarks like MMLU and ChineseQA. This update introduces compressed latent vectors to boost performance and reduce memory usage during inference. Features Grouped-Query Attention (GQA) in the 67B model, enhancing scalability and performance. For example, the DeepSeek R1 model, which rivals ChatGPT in reasoning and general capabilities, was developed for a fraction of the cost of OpenAI’s models. By making its models freely available, DeepSeek fosters an environment of shared innovation, enabling smaller players to fine-tune and adapt the model for their specific needs. DeepSeek R1 is an AI model from DeepSeek, a company backed by the Hangzhou-based hedge fund High-Flyer. In this blog, we will explore how DeepSeek compares to ChatGPT, analyzing their differences in design, performance, and accessibility, and why it has become a rising star in the AI industry.
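For readers unfamiliar with the HumanEval Pass@1 figure quoted above, the following is a minimal sketch of how a pass@k score is commonly computed, assuming the widely used unbiased estimator 1 - C(n-c, k) / C(n, k), where n is the number of samples generated per problem and c is the number that pass the unit tests. The text does not say how the quoted score was actually produced, and the per-problem results below are made-up illustrative data, not benchmark output.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Estimate the probability that at least one of k samples is correct,
    given that c of n generated samples passed the tests."""
    if not (0 <= c <= n and 1 <= k <= n):
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        # Fewer than k failing samples: any draw of k must include a pass.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_pass_at_k(results: list[tuple[int, int]], k: int) -> float:
    """Average the per-problem estimates over a benchmark.

    `results` holds one (n_samples, n_correct) pair per problem.
    """
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


# Hypothetical results for four problems, 10 samples each.
results = [(10, 10), (10, 5), (10, 0), (10, 3)]
score = benchmark_pass_at_k(results, k=1)
print(f"pass@1 = {score:.2%}")
```

With k = 1 the estimator reduces to the fraction of correct samples per problem, averaged across problems, so a pass@1 score can be read as the chance that a single generated solution passes the tests.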
