What Ancient Greeks Knew About DeepSeek That You Still Don't

Author: Quyen | Date: 25-02-13 10:10 | Views: 9 | Comments: 0

You should understand that Tesla is in a better position than the Chinese to take advantage of new methods like those used by DeepSeek. The slower the market moves, the greater the advantage. But the DeepSeek development could point to a path for the Chinese to catch up more quickly than previously thought. Now we know exactly how DeepSeek was designed to work, and we may even have a clue about its highly publicized scandal with OpenAI.

In terms of architecture, DeepSeek-V3 still adopts Multi-head Latent Attention (MLA) (DeepSeek-AI, 2024c) for efficient inference and DeepSeekMoE (Dai et al., 2024) for cost-effective training. These two architectures have been validated in DeepSeek-V2 (DeepSeek-AI, 2024c), demonstrating their capability to maintain robust model performance while achieving efficient training and inference. Beyond closed-source models, open-source models, including the DeepSeek series (DeepSeek-AI, 2024b, c; Guo et al., 2024; DeepSeek-AI, 2024a), the LLaMA series (Touvron et al., 2023a, b; AI@Meta, 2024a, b), the Qwen series (Qwen, 2023, 2024a, 2024b), and the Mistral series (Jiang et al., 2023; Mistral, 2024), are also making significant strides, endeavoring to close the gap with their closed-source counterparts. We introduce an innovative methodology to distill reasoning capabilities from the long-Chain-of-Thought (CoT) model, specifically from one of the DeepSeek R1 series models, into standard LLMs, particularly DeepSeek-V3.

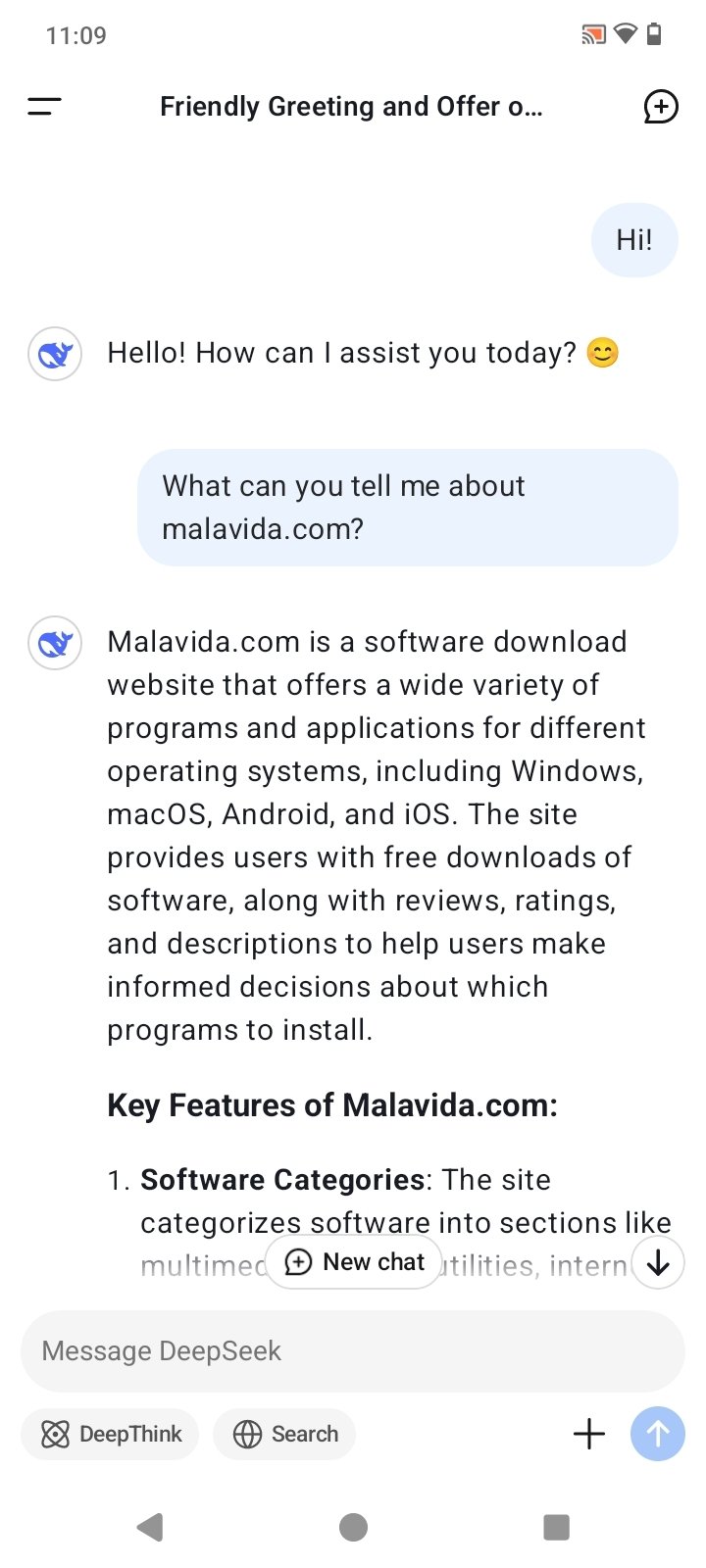

Its chat version also outperforms other open-source models and achieves performance comparable to leading closed-source models, including GPT-4o and Claude-3.5-Sonnet, on a series of standard and open-ended benchmarks. DeepSeek provides the AI-powered chat interface. DeepSeek is designed to provide personalized recommendations based on users' past behaviour, queries, context, and sentiment.

Next, we conduct a two-stage context length extension for DeepSeek-V3. In the first stage, the maximum context length is extended to 32K, and in the second stage, it is further extended to 128K. Following this, we conduct post-training, including Supervised Fine-Tuning (SFT) and Reinforcement Learning (RL) on the base model of DeepSeek-V3, to align it with human preferences and further unlock its potential. Meanwhile, we also maintain control over the output style and length of DeepSeek-V3.

This is no longer a situation where one or two companies control the AI space; there is now a huge global community that can contribute to the progress of these amazing new tools.

It’s a starkly different way of working from established internet companies in China, where teams are often competing for resources. To further push the boundaries of open-source model capabilities, we scale up our models and introduce DeepSeek-V3, a large Mixture-of-Experts (MoE) model with 671B parameters, of which 37B are activated for each token. What are the mental models or frameworks you use to think about the gap between what’s available in open source plus fine-tuning as opposed to what the leading labs produce? Our community is about connecting people through open and thoughtful conversations.

Yes, DeepSeek helps optimize local SEO by analyzing location-specific search trends, keywords, and competitor data, enabling businesses to target hyperlocal audiences and improve rankings in local search results. Businesses can detect emerging search trends early, allowing them to create timely, high-ranking content. Because these models are continually evolving, users can expect consistent improvements in their AI tool of choice, which makes these tools more useful over time.

The best-case scenario is when you get harmless textbook toy examples that foreshadow future real problems, and they come in a box literally labeled ‘danger.’ I am absolutely smiling and laughing as I write this.
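The DeepSeek-V3 figures quoted above (671B total parameters, 37B activated per token) follow from sparse expert routing: each token is sent to only a few experts, so most parameters stay idle for any given token. The sketch below illustrates the idea with generic top-k softmax gating in plain Python. It is not DeepSeekMoE's actual router; the function names, the toy experts, and the gating rule are all assumptions made for illustration.

```python
import math


def top_k_route(scores, k):
    """Pick the k highest-scoring experts and softmax-normalise their scores.

    Generic top-k gating for illustration only (not DeepSeek's router).
    Returns a list of (expert_index, weight) pairs whose weights sum to 1.
    """
    if not 1 <= k <= len(scores):
        raise ValueError("k must be between 1 and the number of experts")
    # Indices of the k largest scores; ties keep their original order.
    top = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
    # Subtract the max before exponentiating for numerical stability.
    peak = max(scores[i] for i in top)
    exps = [math.exp(scores[i] - peak) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]


def moe_forward(x, experts, scores, k):
    """Weighted sum of the outputs of the selected experts only."""
    return sum(weight * experts[i](x) for i, weight in top_k_route(scores, k))


def activated_fraction(total_params, active_params):
    """Share of the model's parameters used for a single token."""
    return active_params / total_params


if __name__ == "__main__":
    # Four hypothetical scalar "experts"; only two run per token.
    experts = [lambda x: x, lambda x: 2 * x, lambda x: 3 * x, lambda x: 4 * x]
    print(moe_forward(1.0, experts, scores=[0.0, 5.0, 1.0, 5.0], k=2))
    print(f"{activated_fraction(671e9, 37e9):.1%} of parameters active per token")
```

With the numbers from the text, roughly 5.5% of the parameters take part in each token's forward pass, which is where the compute saving relative to a dense model of the same size comes from.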

A year after ChatGPT’s launch, the generative AI race is filled with many LLMs from various companies, all trying to excel by offering the best productivity tools. It leads the charts among open-source models and competes closely with the best closed-source models worldwide. Looking at the individual cases, we see that while most models could provide a compiling test file for simple Java examples, the very same models often failed to provide a compiling test file for Go examples. This overlap ensures that, as the model further scales up, as long as we maintain a constant computation-to-communication ratio, we can still employ fine-grained experts across nodes while achieving a near-zero all-to-all communication overhead.

Tesla still has a first-mover advantage for sure. And so on. There may literally be no advantage to being early and every advantage to waiting for LLM initiatives to play out. Period. DeepSeek is not the issue you should be watching out for, in my opinion.

1. Obtain your API key from the DeepSeek Developer Portal.
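Once you have a key, a common practice is to keep it out of source code and load it from the environment. The sketch below shows one way to do that. The environment variable name `DEEPSEEK_API_KEY` and the helper `build_auth_headers` are hypothetical names chosen for this example, and the `Bearer` authorization scheme is an assumption based on the usual convention for HTTP APIs; check the official API documentation before relying on it.

```python
import os


def build_auth_headers(env_var="DEEPSEEK_API_KEY"):
    """Read the API key from the environment and build request headers.

    The variable name is a hypothetical convention for this sketch, and the
    Bearer scheme is an assumption to verify against the official docs.
    """
    api_key = os.environ.get(env_var, "").strip()
    if not api_key:
        raise RuntimeError(f"Set the {env_var} environment variable first")
    return {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
    }
```

Failing loudly when the variable is missing makes a misconfigured environment obvious before any request is sent.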
