When DeepSeek China AI Companies Grow Too Rapidly


Author: Christopher Min… | Posted: 25-02-22 05:44


We remain positive on long-term AI computing demand growth, as a further reduction in computing, training, and inference costs could drive higher AI adoption. Explore competitors' website traffic stats, uncover growth points, and expand your market share. But beyond the market shock and frenzy it caused, DeepSeek's story holds valuable lessons, particularly for legal professionals navigating this rapidly evolving landscape. Its open-source nature, paired with strong community adoption, makes it a valuable tool for developers and AI practitioners looking for an accessible but powerful LLM. DeepSeek LLM was the company's first general-purpose large language model. Which LLM is best for generating Rust code? The market hit came as investors quickly adjusted their bets on AI after DeepSeek claimed that its model was built at a fraction of the cost of its rivals' models. The platform hit the 10 million user mark in just 20 days, half the time it took ChatGPT to reach the same milestone.
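
The "half the time" comparison is plain arithmetic. Taking the 20-day figure here and the 40-day ChatGPT figure quoted later in the post, and assuming (unrealistically) that growth was linear, the implied average daily additions work out as follows. The helper name is illustrative only.

```python
# Average daily user additions implied by the figures quoted in this post:
# 10 million users in 20 days (DeepSeek) versus 40 days (ChatGPT).
MILESTONE_USERS = 10_000_000

def average_daily_users(users: int, days: int) -> float:
    """Mean users added per day, assuming (simplistically) linear growth."""
    return users / days

deepseek_rate = average_daily_users(MILESTONE_USERS, 20)
chatgpt_rate = average_daily_users(MILESTONE_USERS, 40)

print(f"DeepSeek: {deepseek_rate:,.0f} users/day")       # 500,000
print(f"ChatGPT:  {chatgpt_rate:,.0f} users/day")        # 250,000
print(f"Speed-up: {deepseek_rate / chatgpt_rate:.1f}x")  # 2.0x
```

Real adoption curves are rarely linear, so these are averages over each window, not daily rates at any particular moment.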

DeepSeek, launched in January 2025, took a slightly different path to success. Apple CEO Tim Cook shared some brief thoughts on DeepSeek during the January 30, 2025, earnings call. On January 20, the same day as Donald Trump's inauguration in Washington, Chinese Premier Li Qiang held a meeting with experts to consult on the Chinese government's policies for the year ahead. William Blair partner and software analyst Arjun Bhatia thinks that fewer than 10% of that number are paying customers, but he also says the multiple should be applied to DeepSeek's user count a year or two from now. JPMorgan analyst Harlan Sur and Citi analyst Christopher Danley said in separate notes to investors that because DeepSeek used a process known as "distillation", meaning it relied on Meta's (META) open-source Llama AI model to develop its own model, the low spending cited by the Chinese startup (under $6 million to train its recent V3 model) did not fully capture its costs. It will be interesting to see how other AI chatbots adjust to DeepSeek's open-source release and growing popularity, and whether the Chinese startup can keep growing at this rate. Now, let's see what MoA has to say about something that has happened within the last day or two…
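
The analysts' argument hinges on "distillation". As a rough illustration only, here is the textbook logit-matching form of knowledge distillation, in which a smaller student model is trained to match a teacher's temperature-softened output distribution. Nothing in the source says DeepSeek used this exact objective; for LLMs, "distillation" often simply means fine-tuning a smaller model on text generated by a larger one. The logits, temperature, and function names below are hypothetical.

```python
import math

def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Temperature-scaled softmax; a higher temperature gives softer probabilities."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits: list[float], student_logits: list[float],
                      temperature: float = 2.0) -> float:
    """KL(teacher || student) on temperature-softened distributions,
    scaled by T^2 as in the classic soft-label formulation."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2

teacher = [4.0, 1.0, 0.5]        # hypothetical teacher logits for one token
close_student = [3.8, 1.1, 0.4]  # student that roughly agrees with the teacher
far_student = [0.5, 1.0, 4.0]    # student that prefers a different token

print(distillation_loss(teacher, teacher))  # 0.0
print(distillation_loss(teacher, close_student) < distillation_loss(teacher, far_student))  # True
```

Training would minimize this loss over the student's parameters; the sketch only shows that the loss is zero when the student matches the teacher and grows as they diverge.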

By day 40, ChatGPT was serving 10 million users. Given these developments, users are advised to exercise caution. What are their goals? One flaw right now is that some of the games, particularly NetHack, are too hard to move the score; perhaps you'd need some kind of log score system? The AI space is arguably the fastest-growing industry right now. Their AI models rival industry leaders like OpenAI and Google, but at a fraction of the cost. DeepSeek shook the industry last week with the release of its new open-source model, DeepSeek-R1, which matches the capabilities of leading chatbots like ChatGPT and Microsoft Copilot. The most straightforward way to access DeepSeek chat is through its web interface. While OpenAI's o1 maintains a slight edge in coding and factual reasoning tasks, DeepSeek-R1's open-source access and low costs appeal to users. It was trained on 87% code and 13% natural language, and it offers free open-source access for research and commercial use. With an MIT license, Janus Pro 7B is freely available for both academic and commercial use via platforms like Hugging Face and GitHub. Between work deadlines, family responsibilities, and the endless stream of notifications on your phone, it's easy to feel like you're barely keeping your head above water.
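
The "log score system" idea can be made concrete. A minimal sketch, assuming a hypothetical score ceiling (`MAX_SCORE` is made up, not a real NetHack figure) and a hypothetical helper `log_progress`: passing scores through `log1p` lets small early gains register instead of vanishing next to a huge maximum.

```python
import math

def log_progress(raw_score: float, max_score: float) -> float:
    """Map a raw game score onto [0, 1] on a logarithmic scale.

    log1p keeps a score of 0 at 0 and lets small early gains register,
    while very large scores are compressed instead of dominating the metric.
    """
    if raw_score < 0 or max_score <= 0:
        raise ValueError("scores must be non-negative and max_score positive")
    return min(math.log1p(raw_score) / math.log1p(max_score), 1.0)

MAX_SCORE = 1_000_000  # hypothetical ceiling, not a real NetHack figure

for raw in (0, 100, 10_000, 1_000_000):
    linear = raw / MAX_SCORE
    print(f"raw={raw:>9,}  linear={linear:.4f}  log={log_progress(raw, MAX_SCORE):.4f}")
```

On a linear scale a score of 100 out of a million is effectively invisible; on the log scale it already counts for about a third of the range, which is the kind of sensitivity the post seems to be asking for.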

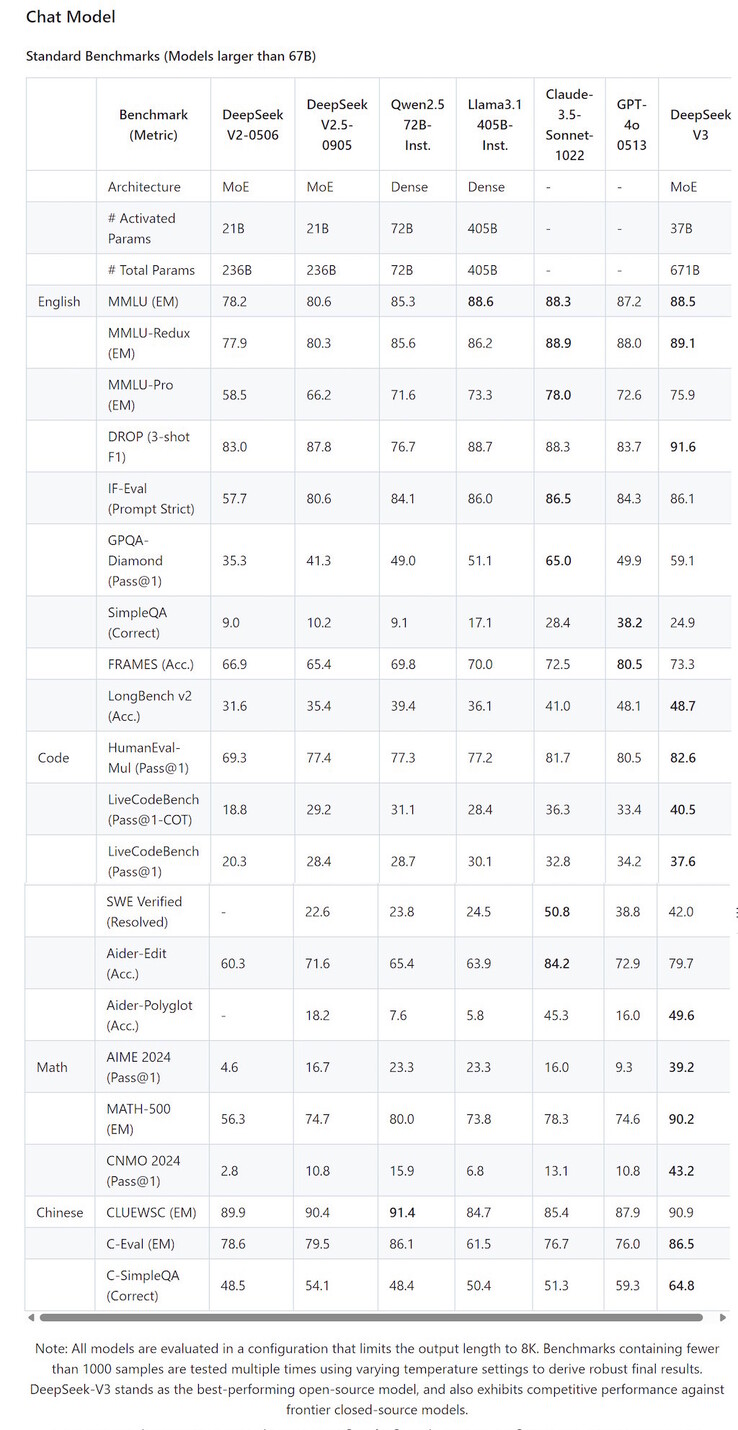

On AIME 2024, it scores 79.8%, slightly above OpenAI o1-1217's 79.2%. This benchmark evaluates advanced multistep mathematical reasoning. OpenAI has been the undisputed leader in the AI race, but DeepSeek has recently stolen some of the spotlight. America must be "laser-focused" on winning the artificial intelligence race, says U.S. People on opposite sides of U.S. How Many People Use DeepSeek? Despite these concerns, banning DeepSeek could be challenging because it is open source. Liang Wenfeng is the founder and CEO of DeepSeek. What to Know About the 40-Year-Old Billionaire. Wenfeng previously ran a hedge fund with $14 billion in assets. It featured 236 billion parameters, a 128,000-token context window, and support for 338 programming languages to handle more complex coding tasks. The model has 236 billion total parameters with 21 billion active, significantly improving inference efficiency and training economics. The other noticeable difference in costs is the pricing for each model.
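
Total-versus-active parameter counts like these are characteristic of mixture-of-experts (MoE) models: a router sends each token to only a few "expert" sub-networks, so most weights sit idle for any given token. The sketch below is a generic top-k gating toy, not DeepSeek's actual router (the source does not describe it); the eight scalar experts, the gate scores, and `k=2` are all hypothetical.

```python
import math
from typing import Callable

# Figures quoted in the paragraph above (billions of parameters).
TOTAL_PARAMS_B = 236
ACTIVE_PARAMS_B = 21

def top_k_route(gate_logits: list[float], k: int) -> dict[int, float]:
    """Select the k highest-scoring experts and softmax-normalize their gate scores."""
    chosen = sorted(range(len(gate_logits)), key=lambda i: gate_logits[i], reverse=True)[:k]
    peak = max(gate_logits[i] for i in chosen)  # subtract the max for numerical stability
    exps = {i: math.exp(gate_logits[i] - peak) for i in chosen}
    total = sum(exps.values())
    return {i: e / total for i, e in exps.items()}

def moe_forward(x: float, experts: list[Callable[[float], float]],
                gate_logits: list[float], k: int = 2) -> float:
    """Run only the routed experts; every other expert stays idle for this input."""
    weights = top_k_route(gate_logits, k)
    return sum(w * experts[i](x) for i, w in weights.items())

# Toy setup (hypothetical): 8 scalar "experts", each just multiplies by a constant.
experts = [lambda x, s=s: s * x for s in range(1, 9)]
gate_logits = [0.1, 2.0, -1.0, 0.5, 3.0, 0.0, -0.5, 1.0]  # a real model computes these per token

print(f"Active fraction per token: {ACTIVE_PARAMS_B / TOTAL_PARAMS_B:.1%}")  # 8.9%
print("Routed experts:", sorted(top_k_route(gate_logits, k=2)))              # [1, 4]
print("Output:", moe_forward(1.0, experts, gate_logits, k=2))
```

Only about 9% of the quoted parameters do work per token, which is why per-token inference compute tracks the 21 billion active parameters rather than the 236 billion total.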