The Most Common Mistakes People Make With DeepSeek

Posted by Janet on 25-02-22 07:46

DeepSeek V3 was unexpectedly released recently. It is a roughly 600B-parameter model. We cannot rule out larger, better models that have not been publicly released or announced, of course. They released all the model weights for V3 and R1 publicly. The paper says that they tried applying it to smaller models and it did not work nearly as well, so "base models were bad then" is a plausible explanation, but it's clearly not true - GPT-4-base is probably a generally better (if more expensive) model than 4o, which o1 is based on (it could be a distillation from a secret bigger one, though); and LLaMA-3.1-405B used a somewhat similar post-training process and is about as good a base model, but is not competitive with o1 or R1. Is this just because GPT-4 benefits a lot from post-training whereas DeepSeek evaluated their base model, or is the model still worse in some hard-to-test way? They have, by far, the best model, by far the best access to capital and GPUs, and they have the best people.

I don’t really see a lot of founders leaving OpenAI to start something new because I think the consensus within the company is that they are by far the best. Building another one would be another $6 million and so on; the capital hardware has already been purchased, and you are now just paying for the compute / power. What has changed between 2022/23 and now that means we have at least three decent long-CoT reasoning models around? It’s a powerful mechanism that allows AI models to focus selectively on the most relevant parts of the input when performing tasks. We tried. We had some ideas that we wanted people to leave those companies and start, and it’s really hard to get them out of it. You see a company - people leaving to start those kinds of companies - but outside of that it’s hard to convince founders to leave. There’s no leaving OpenAI and saying, "I’m going to start a company and dethrone them." It’s kind of crazy.
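
The "mechanism" that lets a model focus selectively on the most relevant parts of its input reads like a description of attention, although the post never names it. Assuming that is what is meant, here is a minimal sketch of scaled dot-product attention for a single query in plain Python. The function names and the toy vectors are illustrative assumptions, not anything taken from DeepSeek's code.

```python
import math

def softmax(scores):
    # Subtract the max before exponentiating for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query, keys, values):
    """Scaled dot-product attention for one query vector (illustrative)."""
    d = len(query)
    # Similarity of the query to each key, scaled by sqrt(dimension).
    scores = [
        sum(q * k for q, k in zip(query, key)) / math.sqrt(d)
        for key in keys
    ]
    # Weights sum to 1; larger weight means "more relevant".
    weights = softmax(scores)
    # The output is the weighted average of the value vectors.
    dim = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, output

# Toy input: three positions, two-dimensional keys and values (made up).
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
weights, output = attention([4.0, 0.0], keys, values)
print(weights, output)
```

The query lines up with the first and third keys, so those positions receive most of the weight and the second is largely ignored, which is the "selective focus" the sentence describes.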

You do one-on-one. And then there’s the whole asynchronous part, which is AI agents, copilots that work for you in the background. But then again, they’re your most senior people because they’ve been there this whole time, spearheading DeepMind and building their organization. There is much power in being approximately right very fast, and it contains many clever tricks which are not immediately obvious but are very powerful. Note that during inference, we directly discard the MTP module, so the inference costs of the compared models are exactly the same. Key innovations like auxiliary-loss-free load balancing for MoE, multi-token prediction (MTP), as well as an FP8 mixed-precision training framework, made it a standout. I feel like this is similar to skepticism about IQ in humans: a kind of defensive skepticism about intelligence/capability being a driving force that shapes outcomes in predictable ways. It lets you search the web using the same kind of conversational prompts that you normally use with a chatbot. Do they all use the same autoencoders or something? OpenAI recently rolled out its Operator agent, which can effectively use a computer on your behalf - if you pay $200 for the Pro subscription.
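
The remark above about discarding the MTP module at inference can be illustrated with a toy model. This is only a sketch under stated assumptions: the class `ToyModel`, its scalar "weights", and the `mtp_weight` factor are all hypothetical and are not DeepSeek's architecture. It shows only the general idea that an auxiliary head can add to the training loss while the inference path never touches it.

```python
class ToyModel:
    """Hypothetical toy: shared trunk, main next-token head, optional MTP head."""

    def __init__(self, use_mtp):
        self.trunk_w = 0.5
        self.main_w = 2.0
        # Extra head that predicts one token further ahead; training only.
        self.mtp_w = 3.0 if use_mtp else None

    def trunk(self, x):
        return self.trunk_w * x

    def training_loss(self, x, next_tok, next_next_tok, mtp_weight=0.3):
        h = self.trunk(x)
        # Main objective: squared error on the next token.
        loss = (self.main_w * h - next_tok) ** 2
        if self.mtp_w is not None:
            # Auxiliary objective: squared error on the token after that.
            loss += mtp_weight * (self.mtp_w * h - next_next_tok) ** 2
        return loss

    def predict(self, x):
        # Inference uses only the trunk and the main head, so the MTP head
        # adds no cost here whether or not it existed during training.
        return self.main_w * self.trunk(x)

with_mtp = ToyModel(use_mtp=True)
without_mtp = ToyModel(use_mtp=False)

loss_with = with_mtp.training_loss(4.0, 3.0, 5.0)
loss_without = without_mtp.training_loss(4.0, 3.0, 5.0)
print(loss_with, loss_without)                        # training objectives differ
print(with_mtp.predict(4.0), without_mtp.predict(4.0))  # inference path is identical
```

In this toy the two models share the same inference code path, which is the sense in which the compared models would cost the same to run.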

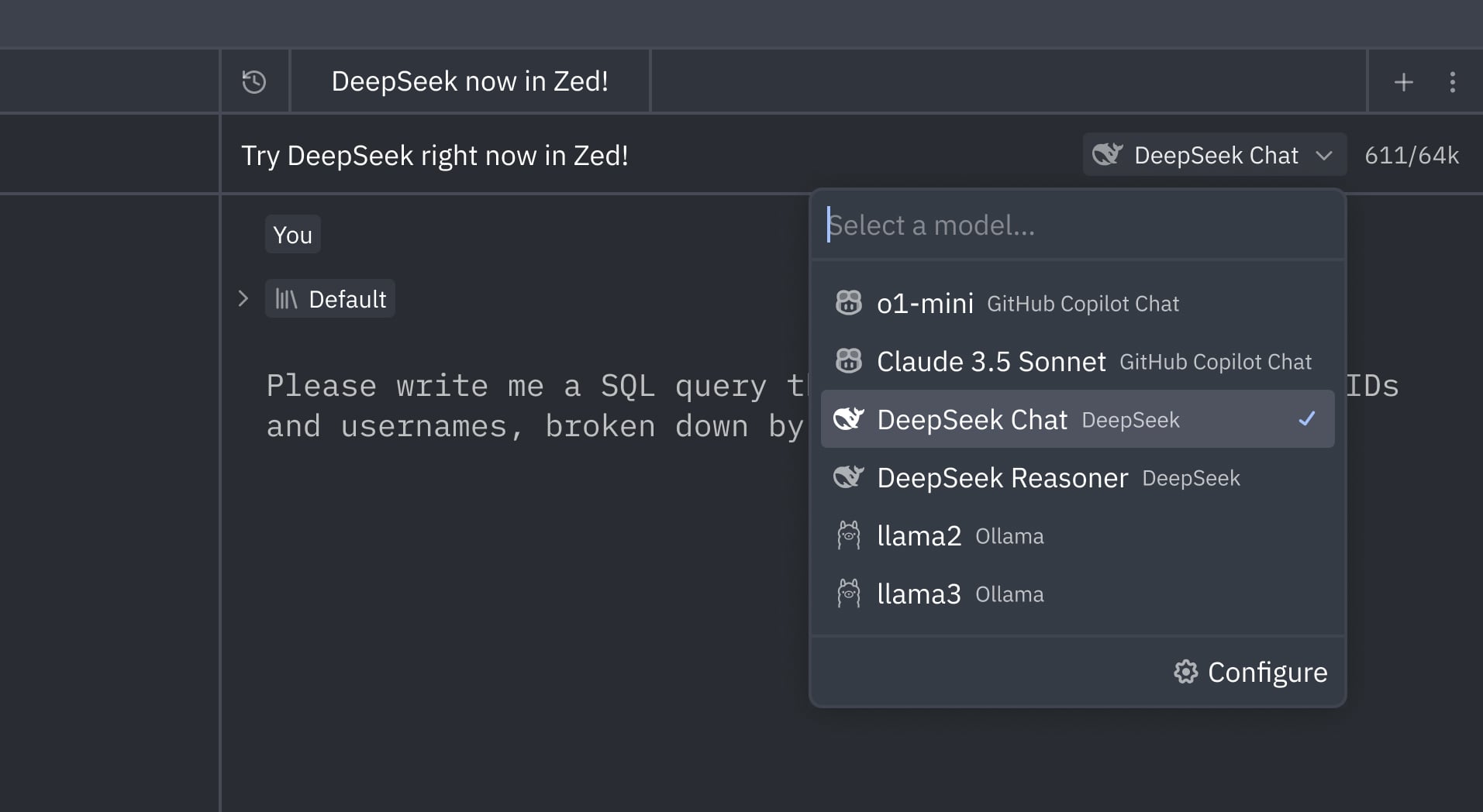

ChatGPT: requires a subscription to Plus or Pro for advanced features. Furthermore, its collaborative features enable teams to share insights easily, fostering a culture of knowledge sharing within organizations. With its commitment to innovation paired with powerful functionality tailored toward user experience, it’s clear why many organizations are turning to this leading-edge solution. Developers at leading AI companies in the US are praising the DeepSeek AI models that have leapt into prominence while also trying to poke holes in the notion that their multi-billion dollar technology has been bested by a Chinese newcomer's low-cost alternative. Why it matters: Between QwQ and DeepSeek, open-source reasoning models are here - and Chinese companies are absolutely cooking with new models that nearly match the current top closed leaders. Customers are now building production-ready AI applications with Azure AI Foundry, while accounting for their varying security, safety, and privacy requirements. I think what has maybe stopped more of that from happening today is that the companies are still doing well, especially OpenAI. 36Kr: What are the essential criteria for recruiting for the LLM team?