Ten Awesome Tips on DeepSeek ChatGPT From Unlikely Sources

Page information

Author: Trinidad | Date: 25-02-22 10:28 | Views: 21 | Comments: 0

Body

This could have significant implications for fields like mathematics, computer science, and beyond, by helping researchers and problem-solvers find solutions to challenging problems more efficiently. This innovative approach has the potential to greatly accelerate progress in fields that rely on theorem proving, such as mathematics, computer science, and beyond. This is a Plain English Papers summary of a research paper called DeepSeek-Prover advances theorem proving through reinforcement learning and Monte-Carlo Tree Search with proof assistant feedback. Monte-Carlo Tree Search: DeepSeek-Prover-V1.5 employs Monte-Carlo Tree Search to efficiently explore the space of possible solutions. DeepSeek-Prover-V1.5 aims to address this by combining two powerful techniques: reinforcement learning and Monte-Carlo Tree Search. This feedback is used to update the agent's policy and guide the Monte-Carlo Tree Search process. DeepSeek-Prover-V1.5 is a system that combines reinforcement learning and Monte-Carlo Tree Search to harness the feedback from proof assistants for improved theorem proving. This feedback is used to update the agent's policy, guiding it toward more successful paths. We will keep extending the documentation but would love to hear your input on how to make faster progress toward a more impactful and fairer evaluation benchmark! Additionally, this benchmark shows that we are not yet parallelizing runs of individual models.
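
To make the search idea above more concrete, here is a minimal sketch of Monte-Carlo Tree Search guided by a checker. It is not DeepSeek-Prover-V1.5's actual algorithm: the "proof assistant" is a toy stand-in (`check`), the tactics `double` and `inc`, the target value, and the depth limit are all hypothetical, and there is no learned policy. It only illustrates how feedback of the form proved / error / open can steer which branches get explored.

```python
import math
import random

# Hypothetical "tactics": each one transforms the current proof state (an int).
TACTICS = {"double": lambda x: x * 2, "inc": lambda x: x + 1}
TARGET, MAX_DEPTH = 10, 5  # assumed toy goal and step budget


def check(state, depth):
    """Toy stand-in for a proof assistant: reports proved / error / open."""
    if state == TARGET:
        return "proved"
    if state > TARGET or depth >= MAX_DEPTH:
        return "error"
    return "open"


class Node:
    def __init__(self, state, depth, parent=None, tactic=None):
        self.state, self.depth = state, depth
        self.parent, self.tactic = parent, tactic
        self.children, self.visits, self.value = [], 0, 0.0

    def ucb(self, c=1.4):
        # UCB1: average reward plus an exploration bonus for rarely tried nodes.
        if self.visits == 0:
            return math.inf
        bonus = c * math.sqrt(math.log(self.parent.visits) / self.visits)
        return self.value / self.visits + bonus


def path_to(node):
    """Tactic names leading from the root to this node."""
    steps = []
    while node.parent is not None:
        steps.append(node.tactic)
        node = node.parent
    return steps[::-1]


def rollout(state, depth, rng):
    """Apply random tactics until the checker stops reporting 'open'."""
    used = []
    while check(state, depth) == "open":
        name = rng.choice(sorted(TACTICS))
        state, depth = TACTICS[name](state), depth + 1
        used.append(name)
    return (1.0 if check(state, depth) == "proved" else 0.0), used


def search(start=1, iterations=200, seed=0):
    """Return the shortest proving tactic sequence found, or None."""
    rng = random.Random(seed)
    root, proof = Node(start, 0), None
    for _ in range(iterations):
        node = root
        while node.children:  # selection
            node = max(node.children, key=Node.ucb)
        if check(node.state, node.depth) == "open":  # expansion
            node.children = [Node(f(node.state), node.depth + 1, node, name)
                             for name, f in TACTICS.items()]
            node = rng.choice(node.children)
        reward, tail = rollout(node.state, node.depth, rng)  # simulation
        if reward == 1.0:
            candidate = path_to(node) + tail
            if proof is None or len(candidate) < len(proof):
                proof = candidate
        while node is not None:  # backpropagation of the checker's verdict
            node.visits += 1
            node.value += reward
            node = node.parent
    return proof


def replay(tactics, start=1):
    """Re-apply a tactic sequence to confirm where it ends up."""
    state = start
    for name in tactics:
        state = TACTICS[name](state)
    return state
```

Calling `search()` returns a list of tactic names, and `replay(search())` re-checks that the sequence really reaches the target. In a real system the checker would be an actual proof assistant and the random rollout would be replaced by a trained model.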

1.9s. All of this might sound pretty fast at first, but benchmarking just 75 models, with 48 cases and 5 runs each at 12 seconds per task would take us roughly 60 hours - or over 2 days with a single process on a single host. With the new cases in place, having code generated by a model plus executing and scoring it took on average 12 seconds per model per case. DeepSeekMath: Pushing the Limits of Mathematical Reasoning in Open Language Models and AutoCoder: Enhancing Code with Large Language Models are related papers that explore similar themes and advancements in the field of code intelligence. Gemini 1.5 Pro also illustrated one of the key themes of 2024: increased context lengths. A group of independent researchers - two affiliated with Cavendish Labs and MATS - have come up with a very hard test for the reasoning abilities of vision-language models (VLMs, like GPT-4V or Google’s Gemini). Second only to OpenAI’s o1 model in the Artificial Analysis Quality Index, a well-followed independent AI analysis ranking, R1 is already beating a range of other models including Google’s Gemini 2.0 Flash, Anthropic’s Claude 3.5 Sonnet, Meta’s Llama 3.3-70B and OpenAI’s GPT-4o. Additionally, we removed older versions (e.g. Claude v1 is superseded by the 3 and 3.5 models) as well as base models that had official fine-tunes that were always better and would not have represented the current capabilities.
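
The 60-hour figure above follows directly from the numbers quoted in the text, and can be checked in a few lines. The `wall_clock_hours` helper is an added illustration, not something from the source: it assumes tasks are independent and scale perfectly across a hypothetical number of workers.

```python
import math

# Figures quoted in the text.
models, cases, runs, seconds_per_task = 75, 48, 5, 12

tasks = models * cases * runs
total_hours = tasks * seconds_per_task / 3600
total_days = total_hours / 24
print(f"{total_hours:.0f} hours, {total_days:.1f} days")  # 60 hours, 2.5 days


def wall_clock_hours(workers):
    """Idealized estimate: tasks split evenly over `workers` (an assumption)."""
    return math.ceil(tasks / workers) * seconds_per_task / 3600
```

Under that idealized assumption, 8 parallel workers would bring the run down to 7.5 hours; real speedups would be lower because of shared resources and scheduling overhead.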

Improved code understanding capabilities that allow the system to better comprehend and reason about code. It highlights the key contributions of the work, including advancements in code understanding, generation, and editing capabilities. These advancements are showcased through a series of experiments and benchmarks, which demonstrate the system's strong performance in various code-related tasks. This demonstrates that clever engineering and algorithmic advancements can sometimes overcome limitations in computational resources. If the proof assistant has limitations or biases, this could impact the system's ability to learn effectively. In the context of theorem proving, the agent is the system that is searching for the solution, and the feedback comes from a proof assistant - a computer program that can verify the validity of a proof. Proof Assistant Integration: The system seamlessly integrates with a proof assistant, which provides feedback on the validity of the agent's proposed logical steps. Reinforcement learning is a type of machine learning where an agent learns by interacting with an environment and receiving feedback on its actions. Chatsonic is an SEO AI Agent that’s designed specifically for SEO and marketing use cases.
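
The agent-and-feedback loop described above can be sketched with a very small reinforcement learning example. Everything here is an illustrative assumption rather than the paper's setup: the three tactic names are hypothetical, `verifier` is a stand-in for a proof assistant that happens to accept only one of them, and the update is a plain REINFORCE-style rule on a softmax policy with no baseline.

```python
import math
import random

TACTICS = ["tactic_a", "tactic_b", "tactic_c"]  # hypothetical action names


def verifier(tactic):
    """Stand-in for a proof assistant: accepts exactly one tactic (assumption)."""
    return 1.0 if tactic == "tactic_b" else 0.0


def softmax(prefs):
    """Turn preference scores into a probability distribution."""
    peak = max(prefs)
    exps = [math.exp(p - peak) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]


def train(steps=500, lr=0.1, seed=0):
    """Sample a tactic, ask the verifier, and reinforce what was accepted."""
    rng = random.Random(seed)
    prefs = [0.0] * len(TACTICS)
    for _ in range(steps):
        probs = softmax(prefs)
        choice = rng.choices(range(len(TACTICS)), weights=probs)[0]
        reward = verifier(TACTICS[choice])
        for i in range(len(TACTICS)):
            indicator = 1.0 if i == choice else 0.0
            # Policy-gradient step: raise the chosen action's preference
            # in proportion to the reward, lower the others.
            prefs[i] += lr * reward * (indicator - probs[i])
    return dict(zip(TACTICS, softmax(prefs)))
```

After `train()`, the returned policy puts the most probability on the tactic the verifier accepts. It also shows the limitation noted above: the agent can only learn what its verifier rewards, so any bias in the checker is inherited by the policy.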

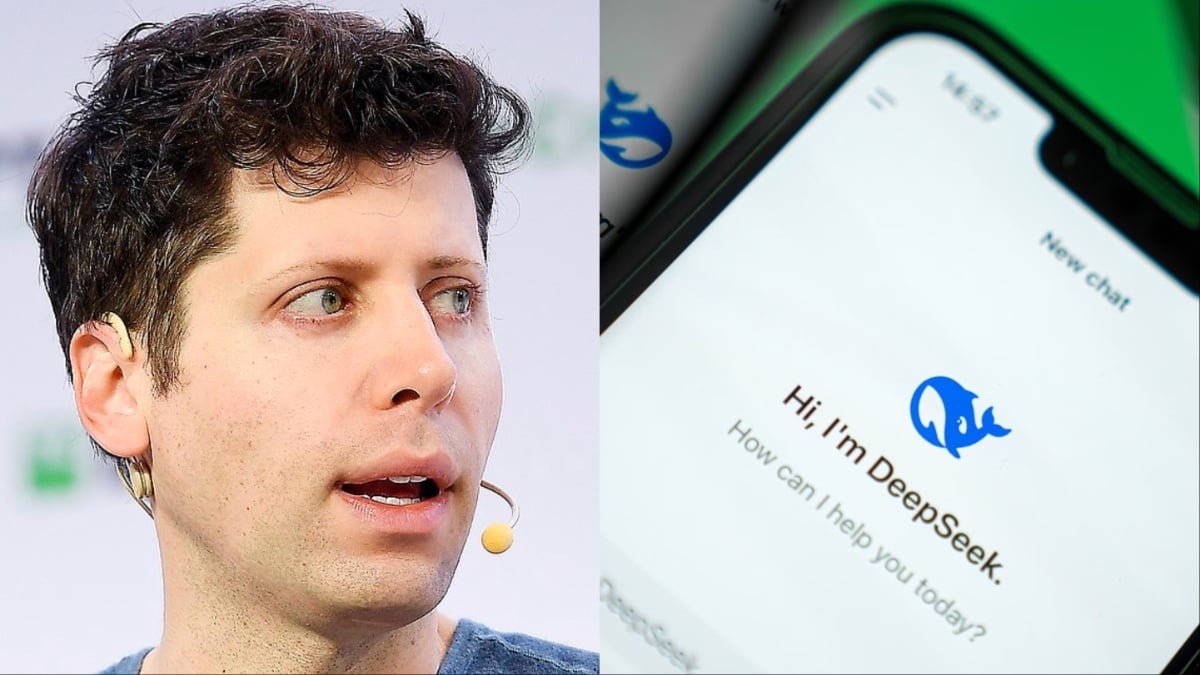

As mentioned above, there is little strategic rationale in the United States banning the export of HBM to China if it will continue selling the SME that local Chinese companies can use to produce advanced HBM. DeepSeek, a modest Chinese startup, has managed to shake up established giants such as OpenAI with its open-source R1 model. JPMorgan analyst Harlan Sur and Citi analyst Christopher Danley said in separate notes to investors that because DeepSeek used a process called "distillation" - in other words, it relied on Meta’s (META) open-source Llama AI model to develop its model - the low spending cited by the Chinese startup (under $6 million to train its recent V3 model) did not fully encompass its costs. Last week, President Donald Trump announced a joint project with OpenAI, Oracle, and SoftBank called Stargate that commits up to $500 billion over the next four years to data centers and other AI infrastructure. Testing: Google tested the system over the course of 7 months across 4 office buildings and with a fleet of at times 20 concurrently controlled robots - this yielded "a collection of 77,000 real-world robotic trials with both teleoperation and autonomous execution".

If you have any questions about where and how to use DeepSeek Chat, you can contact us at our website.
